High Throughput Process Development in Biomanufacturing – Current Challenges and Benefits

Process development is, and has always been, a key component in the successful scale-up of bioprocesses to commercial manufacturing scale. At the Fourth High Throughput Process Development Conference in Toledo, Spain, several methods for high throughput process development were discussed for both upstream and downstream applications. Challenges and benefits were described along with ideas on where the industry can go from here. The proceedings were collected in a recently published report, “HTPD – High Throughput Process Development,” and include extended abstracts of talks presented at the conference. The report is very informative, and I have summarized some key areas of focus in this article. We were also fortunate to be able to interview one of the conference chairs, Mats Gruvegard of GE Healthcare Life Sciences, about what he saw as key takeaways from the conference and where he sees the future of high throughput process development heading.

Need for Better Industry Relevant Models

One of the subjects that came up in several of the presentations was the need for better, industry-relevant models. A frequent challenge of using models, whether computerized or scale-down versions, is that they don’t always hold true at commercial manufacturing scale or conditions. When that happens, the model wastes valuable resources rather than saving them.

In the first article, “Modified batch isotherm determination method for mechanistic model calibration,” authors Hahn et al. discuss how isotherm determination in 96-well filter plates with chromatography resins is preferred to column experiments because it is simpler to perform. However, two steps of the method are problematic: assuming an equivalent column volume and estimating the adsorption isotherm model parameters from the QC data. Significant well-to-well differences and deviations from the expected equivalent column volume are common and often result in incorrect predictions of column experiments. The authors addressed these problems with a modified method that fits batch isotherms to mechanistic model equations relying only on applied and measured supernatant concentrations. Thus, an assumption about the resin amount in the well is no longer needed. This made the isotherm model more relevant and transferable from small to large scale. (HTPD – High Throughput Process Development, pages 6-9)
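The idea of fitting from applied and measured supernatant concentrations alone can be illustrated with a minimal sketch. This is not the authors' method: it assumes a Langmuir isotherm, a known liquid volume per well, and a simple Hanes-Woolf linearization, none of which are stated in the report. All function names, parameter values and the synthetic data are hypothetical. The point it shows is that the mass balance over a well lets the binding capacity be expressed per well, so no resin volume has to be assumed.

```python
import math

# Assumption: Langmuir binding per well, m_bound = cap * K * c / (1 + K * c),
# where cap is the total binding capacity of the well (mg), not a per-volume value.
# Mass balance per well: v_liq * (c0 - c_eq) = m_bound. No resin volume appears.

def equilibrium_conc(c0, v_liq, cap, k):
    """Supernatant concentration (mg/mL) at equilibrium for applied concentration c0."""
    a = v_liq * k
    b = v_liq + cap * k - v_liq * k * c0
    c = -v_liq * c0
    # Positive root of a*x^2 + b*x + c = 0 from mass balance plus Langmuir.
    return (-b + math.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)

def fit_langmuir_per_well(c0_list, ceq_list, v_liq):
    """Estimate (cap, K) by linear regression on c/m = c/cap + 1/(cap*K)."""
    xs = list(ceq_list)
    # Bound mass comes only from applied and measured concentrations.
    ys = [ceq / (v_liq * (c0 - ceq)) for c0, ceq in zip(c0_list, ceq_list)]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return 1.0 / slope, slope / intercept

# Synthetic, noise-free illustration data (hypothetical values, not from the report).
V_LIQ = 0.2                       # mL of liquid per well
TRUE_CAP, TRUE_K = 2.0, 5.0       # mg per well, mL/mg
applied = [0.5, 1.0, 2.0, 4.0, 8.0]  # mg/mL
measured = [equilibrium_conc(c0, V_LIQ, TRUE_CAP, TRUE_K) for c0 in applied]

cap_fit, k_fit = fit_langmuir_per_well(applied, measured, V_LIQ)
print(cap_fit, k_fit)
```

With real plate data, measurement noise would make a nonlinear least-squares fit preferable to the linearization used here, and well-to-well differences would show up as different fitted capacities per well.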

In another article, “Scalability of mechanistic models for ion exchange chromatography under high load conditions,” authors Huuk et al. discuss the need to meet the evolving regulatory requirements for a Quality by Design approach to biomanufacturing. An important component of this is a thorough mechanistic process understanding. To achieve this, you must implement fundamental models that reliably reflect industrial conditions. Specifically, can the model predict the highly overloaded conditions in preparative purification tasks, and will the model parameters be transferable across different column scales? To address these issues, the authors present an industrial case study on an intermediate purification of a monoclonal antibody with high protein load density and low salt concentration in the protein sample. An unusual elution peak was observed that cannot be modeled with the more commonly used equations for ion exchange chromatography. This case study supports the fundamental assumption of in silico scale-up and scale-down of chromatography, that only the fluid dynamics outside the pore system change. Once inside the pores, the same mechanism applies to robotic and laboratory-scale columns. Even for the observed complex adsorption behavior, the models calibrated from three gradients at 0.6 and 1 mL scale were able to accurately predict the 16 mL scale. A further scale-up to pilot and production scale is expected to work successfully as well. (HTPD – High Throughput Process Development, pages 10-12)

In the article “Progress and challenges in high throughput stability screening at Genentech,” authors Smithson et al. explain that since protein therapeutics are becoming more diverse, there is a need for high throughput screening methods that cover a wider range of formulation conditions and can predict long-term stability. However, this must be done using minimal material. They describe two studies. The first looks at the application of high-throughput methods to assess physical stability, particularly aggregate formation. They were interested in the differential between T onset and T agg, and the effect this had on aggregate formation due to protein unfolding, as well as the role of temperature. They are further investigating how to use this information to inform better formulation and stability studies. They are also examining whether this information could permit formulation and shelf-life risks to be identified early, thus creating better predictive models for understanding thermal stress and shelf-life stability for mAbs.

In the second study, the authors evaluated the use of miniaturized containers for formulation development. While scaled-down models are often used in upstream and downstream process development, the concern with using them in formulation studies has been that changing the contact material during stability studies will not provide data that is relevant to final drug product containers. The authors looked to identify a miniaturized, automation-friendly container that shows degradation patterns similar to the 2CC glass vials used for drug product. They tested a variety of glass and plastic containers against the 2CC glass vials for 4 weeks under thermal stress. The results showed that all glass vials had different levels of oxidation, none matching the 2CC glass vial. The plastic containers had relatively low levels of oxidation, not matching the 2CC glass vial either. The authors concluded that further studies were needed to see if there was an appropriate substitute. (HTPD – High Throughput Process Development, pages 42-45)

Scale-down models

Several articles focused on using scale-down systems to model process conditions. Scale-down models are powerful tools in both upstream and downstream process development. However, there are at times differences between scale-down data and what is seen at lab scale or at larger production scale. In scale-down chromatography models there can be poor resolution, low superficial flow velocities and intermittent flow, which impact the results and limit the ability of the model to inform larger scale process development.

In one article highlighting scale-down models, “Applications of directly scalable protein A chromatography for high-throughput process development,” authors Scanlon et al. describe two case studies that demonstrate how a cellulose fiber membrane functionalized with protein A can be used in complementary ways at 0.4 mL laboratory scale and in a 60 µL 96-well plate format. The first case study involves the process development of a primary capture step on an industrially relevant mAb, varying process conditions such as the pH and concentration of elution and post-load wash buffers as well as flow rate. The second illustrates the integration of the capture step into upstream development by using the robust cellulose fiber matrix to handle centrifuged material from ambr™ 15 bioreactors to obtain samples for analysis during a cell line selection screen.

In both studies, the authors demonstrated the utility of cellulose fiber devices as a tool for high throughput process development. Key advantages of the cellulose fiber devices are that small quantities of adsorbent yield large amounts of data and that, because of the immediate mass transfer observed with the membrane adsorbent, there is less complexity when scaling up. The 96-well format is good for areas where carryover could be an issue. (HTPD – High Throughput Process Development, pages 59-64)

Another abstract that looks at scale-down modeling is “Comparison of relative resin content between pre-packed mini- and laboratory scale chromatography columns,” where authors Monie et al. point out the importance of understanding the differences between column formats and their impact on scale-up. The authors compared dynamic binding capacity in mini-columns (RoboColumns) with dynamic binding capacity in lab-scale columns. They found that the results for the two formats were in good agreement and that both could be used for parallel screening and optimization of dynamic binding capacity. There were small but significant differences in resin dry weight content per column volume, which correlated with the difference in determined dynamic binding capacity between column formats. (HTPD – High Throughput Process Development, pages 23-24)

The use of RoboColumns was also explored in the article, “Understanding RoboColumn™ scale offsets and exploring the use of RoboColumn units as a scale-down model.” In the article, authors Chinn et al. seek a better understanding of how scale offsets vary with process parameters for RoboColumn units. While scale comparability has been examined, scale offsets have not. The authors demonstrate how scale offsets for monoclonal antibody yield, pool volume and impurity clearance vary with process parameters for two model bind-and-step-elute chromatography processes – cation exchange chromatography and protein A chromatography. By understanding how these scale offsets vary with process parameters, better prediction of bench-scale column performance and better utilization of RoboColumns as scale-down models can be achieved. The authors summarize that “RoboColumn to bench-scale offsets are dependent of process parameters and driven by exacerbated peak broadening on the RoboColumn format. For bind-and-step-elute processes, yields across scales were found to be comparable except for very weak elution buffers, and pool volumes were found to be larger at RoboColumn scale. Impurity separation scale-comparability was found to be dependent of the mechanism of separation. Impurities separated by a step-elution were found to be comparable across all process parameters, while the scale offset of impurities separated by a wash phase was dependent of wash process parameters.” (HTPD – High Throughput Process Development, pages 46-49)

Increasing the use of Robotics and Automation

Automation was discussed several times in the report as a way to enable high throughput process development tasks. The studies often combined 96-well plates with liquid handling equipment to automate time-consuming tasks and enable screening of many more experimental conditions.

One article, “How cell culture automation benefits upstream process development,” highlights the addition of automation to upstream process development. Author Musmann describes how Roche developed an automated, multi-well plate based screening system for suspension cell culture that is now routinely used in their late-stage cell culture process development. The system is a fully automated workflow with integrated analytical instrumentation. Six 24-well plates are used as bioreactors and can be run in both batch and fed-batch modes. This permits a capacity of up to 576 bioreactors in parallel. The system provides a high degree of parallelization and automation, sophisticated experimental designs, statistically sound data sets based on a large number of replicates, and deeper process understanding for increased process control. One of the biggest challenges of the system was the handling of big data. To address this, software for high throughput applications using robotics (SHARC) was developed.

Musmann described using this system for three applications: 1) to identify levers to reduce trisulfides, 2) to increase product concentration without impacting product quality, and 3) in advanced clone screening to include a combination of clone evaluation and process optimization in one step. (HTPD – High Throughput Process Development, pages 17-22)

High Throughput Process Development for other specific mAb applications

There are certain mAb processes that need a more specialized type of process development plan, for instance, biosimilars. “Rapid process development of a cation exchange step for a biosimilar,” by Teichert et al., describes a collaboration between GE Healthcare and Alvotech to develop a cation exchange chromatography step. GE Healthcare’s extensive downstream bioprocessing experience was coupled with Alvotech’s biosimilar expertise and knowledge of the specific requirements for their molecule to create a process that reached all purification goals.

The team began by taking a standardized workflow and adapting it to a high throughput process development approach. They screened to select the optimal resin from three candidates, selected based on experience. Then they optimized running conditions, again initially selected based on experience, to meet the specific biosimilar requirements. The goal was to reduce aggregate levels to approximately 0.6% after the polishing step in conjunction with obtaining the highest yield possible for the monomer. High throughput process development experiments were performed in both 96-well plates and small-scale columns.

By working in collaboration and sharing expertise, high throughput tools were incorporated into process development for a purification process specifically tailored to meet the needs of this biosimilar. Using the Fast Trak standardized workflow meant initial running conditions were identified in just four weeks for further process development. (HTPD – High Throughput Process Development, pages 25-28)

High Throughput Process Development for Non-mAb Processes

While most of the articles looked at mAb manufacturing, there was one example of implementing high throughput process development in an E. coli production system. In the article, “Integrated process development: case studies for high quality analysis of upstream screenings,” authors Walther et al. describe the development of a scaled-down, high throughput process development platform for E. coli. Using this platform, they looked at recovery and purity of proteins from inclusion bodies. Steps in the platform included cell harvest, cell disruption, inclusion body preparation, solubilization, refolding and chromatographic purification. All unit operations were performed in 96-well plates on a liquid handling system (Tecan) except for cell disintegration, which was performed using a mechanical bead mill.

Authors looked at whether the platform was predictive from miniaturized to bench scale. What they found was that the platform could predict trends for product quality, recovery and purity. It was also used in supporting troubleshooting in upstream and optimization of fermentation processes. Use of this platform resulted in reduced time and material consumption. (HTPD – High Throughput Process Development, pages 38-41)

High Throughput Tools in Chromatography Development

In an article that addresses the supply of resin materials, “Towards understanding and managing resin variability,” authors Hagwall et al. discuss how important it is that drug manufacturers understand the critical attributes of raw materials, and the variability of those attributes, to generate process understanding. QbD experiments are often centered around variability concerns.

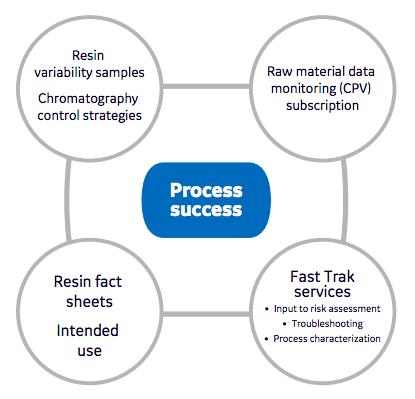

The authors explain that when resins are developed, they are designed based on an established intended use. For processes where the intended use, molecule, or process conditions differ significantly from the model used in development, the structure-function relationship may no longer apply. In these instances, the impact of raw material variability is very important. The authors explain that using available tools and collaborating with the supplier are important for assessing robustness risk and any characterization needed for these non-intended use scenarios. They suggest tools including resin fact sheets, reference materials, resin variability sample sets and a methodology for residual risk management, including raw material monitoring, as important considerations in both early and late stage process development (Figure 1). (HTPD – High Throughput Process Development, pages 55-58)

We were fortunate to be able to interview Mats Gruvegard, GE Healthcare Life Sciences, Conference Chair, HTPD, about key takeaways from the conference. Please see our interview below.

Why is high throughput process development such an important topic that it requires an entire conference?

Industrial bioprocess development is changing, primarily driven by two major factors. The first factor is the need to keep down the R&D costs of developing therapeutic candidates, with a simultaneous demand to speed up the development timeline due both to competition and to requirements from escalating costs in the healthcare systems.

The second factor is increasing regulatory pressure to better control and understand critical process parameters. Therefore, the number of experiments that must be performed in a limited timeframe has increased. The benefits of HTPD, including miniaturization, parallelization and automation, can help with both these factors. HTPD methods are now widely used in all areas of process development, from upstream processing to stable formulations. To date, these methods have proven especially well-suited for the development of robust downstream processes. There are also many examples of routine utilization for upstream processes, and this area continues to evolve rapidly.

When organizing this conference with your co-chairs Phillip Lester, Roche and Karol Łącki, Avitide, what were the topics that you really wanted to cover?

The objective for us as conference organizers was to show what is next in this field. Are we thinking enough of the future? What are the next “Big Things” that will take process development and HTPD to the next phase of evolution?

We also wanted to slightly expand the scope of the conference. HTPD is and will continue to be an important tool for efficient process development. However, there are complementary approaches including nanoscale process development, in-silico and mechanistic modeling, and other approaches to smart PD.

Were there things that you discovered during the conference that surprised or excited you about work being done in this area?

Probably the most significant advancement presented during the conference, according to me personally and to many other attendees, was on the theme of nanoscale process development. Keith Breinlinger from Berkeley Lights presented their OptoSelect chip technology and how it could be used for improved clone selection. Breinlinger presented a platform that could automate cell line development by operating single cells in a nanofluidic environment. Using light, single cells were isolated in individual nanoliter-sized wells, and growth rates could then be simultaneously monitored on thousands of clones. Top producing clones were selected by titer and growth and could potentially be ready to be scaled up to bioreactor.

Where do you see HTPD moving in the future?

Knowledge-based process development based on HTPD, in-silico and mechanistic modeling will become more important and more utilized in the industry. It is also clear that the scale in development has the potential to go down even further, as shown by Berkeley Lights as well as in the keynote presentation by Marcel Ottens at Delft University of Technology in the Netherlands. Ottens presented work with a novel microfluidic system on a lab chip and micro-columns in the size of 50-70 nL.

Furthermore, the application of HTPD to newer complex modalities (viral vectors, exosomes etc.) will continue to grow and the industry needs to develop strategies for overcoming emerging challenges with these targets.

The first HTPD conference happened in 2010. Since then, what have been the major achievements and where do we still need to go to realize the full value of HTPD?

The main goal of this scientific conference has not changed since the very first meeting in 2010. It is still to provide a leading forum for discussion and exchange of ideas surrounding the challenges and benefits of employing high-throughput techniques in the process development of biopharmaceuticals. The HTPD conference series started as a joint initiative from GE Healthcare and Genentech at a time when high throughput process development was in its early days and mainly limited to 96-well plates filled with chromatography resins, used for resin selection and initial screening of running conditions. Since then, HTPD in chromatography applications has become well established among many biopharmaceutical companies and has also expanded to upstream and cell culture screening as well as to formulation applications.

Currently there is also a significant difference in the maturity of HTPD and smartPD approaches between different companies. I believe it is necessary for more companies to learn and understand these techniques, and the HTPD conference could be a good starting point.

During the four days in October in Toledo last year it was obvious that HTPD is still rapidly progressing. I expect further progress in all areas discussed.

Two examples of remaining challenges include:

- Analytical bottlenecks – a limited number of quick methods exist for determining critical quality attributes, especially for biomolecules that are not monoclonal antibodies

- Efficient processing of large datasets – concerns in the industry about data analysis and management also relate to software that enables the management of datasets and creation of databases

Have you begun planning the next HTPD conference?

Yes, it is a great pleasure to announce the 5th international HTPD conference. In November 2019 we will be in Porto, Portugal. The program outline is ready and the chairmen have accepted. I encourage all who have an interest in biopharmaceutical process development and want to learn, explore or disseminate new ideas and information to join us in Porto. Visit https://www.htpdmeetings.com for more information.

To learn more, please read “HTPD – High Throughput Process Development – Extended Reports”.

Please see related articles:

- Utilizing High-Throughput Process Development Tools to Create a Purification Process for a Biosimilar Molecule

- Implementing Digital Biomanufacturing in Process Development